Research

We believe that a better understanding of the brain plays a vital role in developing intelligent systems. As the field of artificial intelligence (AI) advances rapidly, it is crucial to study the brain for ideas that can accelerate and guide AI research. Our lab's interests include, but are not limited to, the following research areas.

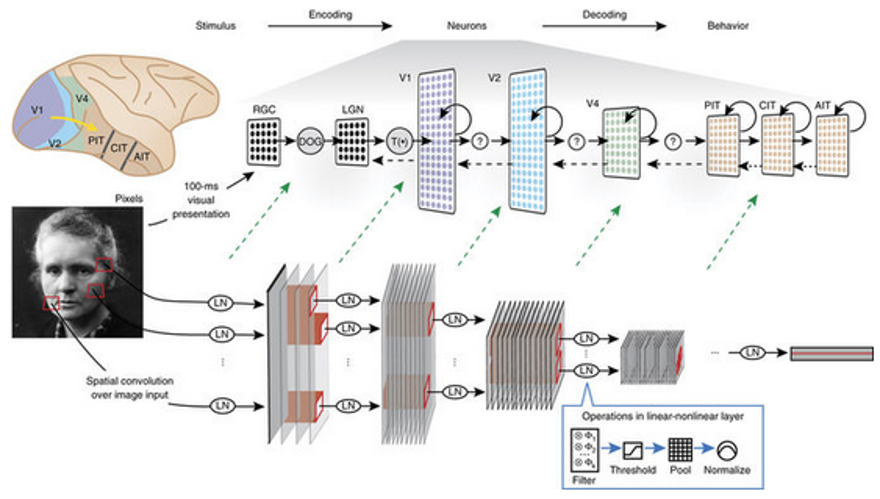

Brain-Inspired AI

Brain-Inspired AI is a field of artificial intelligence that seeks to design systems and algorithms modeled on the structure, function, and principles of the human brain and nervous system. The goal is to create AI that can learn, reason, perceive, and adapt in ways closer to human intelligence, drawing on insights from neuroscience, psychology, and cognitive science. Brain-Inspired AI is applied in advanced robotics, autonomous systems, cognitive computing, lifelong learning, and neuromorphic computing, among other areas. These applications benefit from the adaptability, robustness, and human-like reasoning that brain-inspired approaches aim to provide.

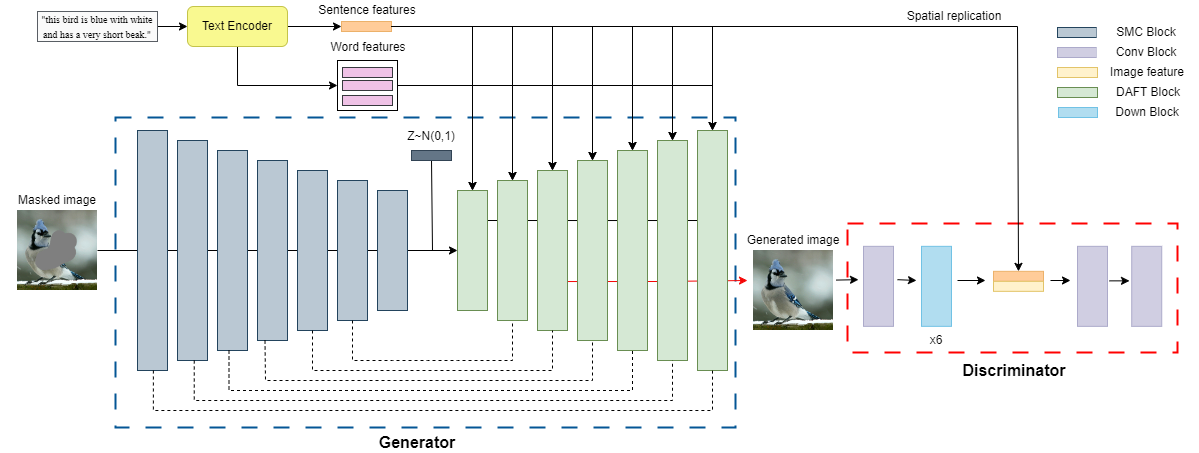

Generative AI

Generative AI is a branch of artificial intelligence focused on creating new content, such as text, images, audio, video, or code, by learning patterns from large datasets and then generating data that mimics or extends those patterns. Unlike traditional AI, which typically classifies, predicts, or analyzes existing data, generative AI produces new data or media in response to prompts, often with results that closely resemble, and in some cases rival, human-created work.

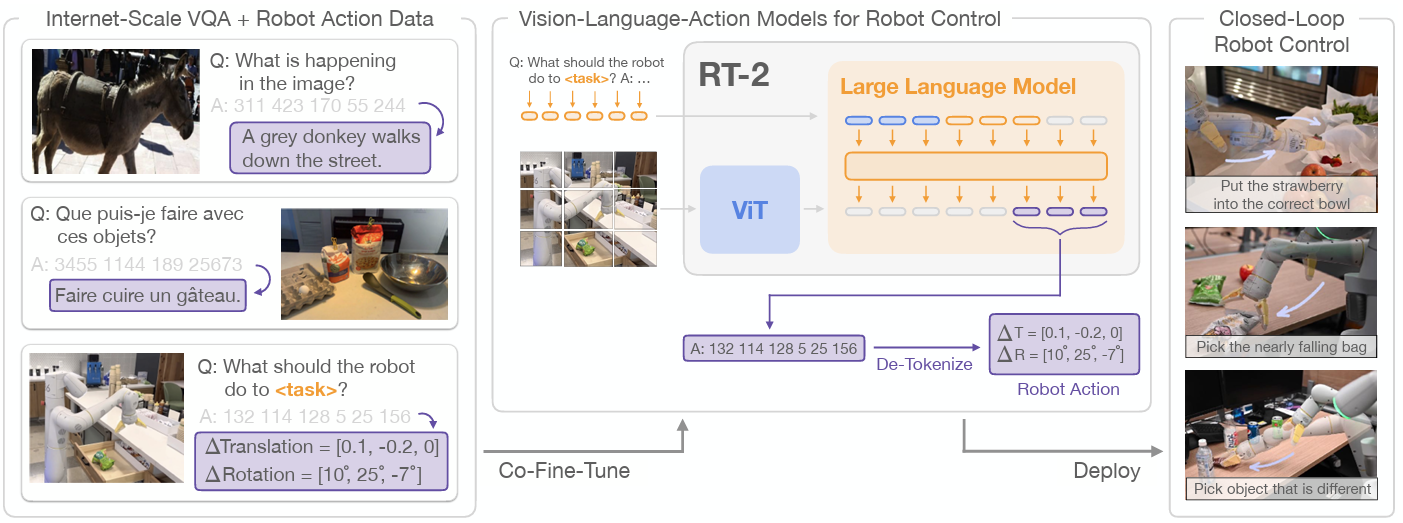

Physical AI

Physical AI is an emerging and transformative discipline that fuses artificial intelligence with robotics, enabling machines not only to process information but also to perceive, reason, and act within the real, physical world. Unlike traditional AI, which is confined to software, digital data, and virtual environments, Physical AI is embodied in hardware systems such as robots, drones, autonomous vehicles, and smart devices that interact directly with their surroundings.